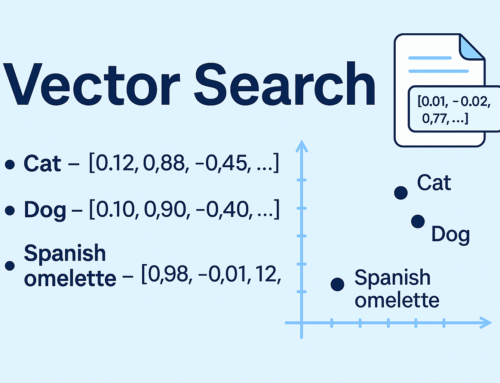

In the first part of this series, we looked at how to enrich your data with meaning by generating embeddings using OpenAI’s API. We stored those vectors in our Oracle APEX app, laying the groundwork for a smarter kind of search — one that understands concepts, not just words.

Now it’s time to go a step further.

In this follow-up, we’ll explore how to actually use those vectors to find relevant content based on meaning. At the heart of it is Oracle’s VECTOR_DISTANCE function, which measures the distance between two vectors and so tells us how semantically close the texts behind them are.

This means we’re no longer relying on LIKE '%word%' queries that only work when users guess the right terms. Instead, we compare the essence of what the user is searching for with the embedded meaning of the data we’ve stored.

We’ll walk through how to implement this inside a simple SQL query, hook it up to a dynamic search field in APEX, and deliver results ranked by conceptual similarity. The stored descriptions never need to be re-embedded at query time; the only embedding generated during a search is the one for the user’s input.

Let’s build search that understands what users mean, not just what they type.

The APEX builder screen shows the setup of a semantic search page, including a search input, dynamic actions, and a Classic Report with a SQL query using VECTOR_DISTANCE for meaning-based results.

This is the interface we designed to begin experimenting with semantic search in Oracle APEX. It’s clean and minimal: a single input field where users can type a descriptive phrase, a “Search” button, and a Classic Report that displays the results. Each result includes the artwork’s title, description, MIME type, and a column for the semantic distance, which we’ll explain shortly.

The semantic search page displays a list of artworks ranked by conceptual similarity, with columns for title, description, MIME type, and semantic distance — ready for user input to activate the vector-based ranking.

In this screen, we’re still seeing a distance of zero — that’s simply because no search has been performed yet. But that’s about to change. Now comes the interesting part: setting up the logic that will turn a user’s input into meaning, and that meaning into a ranked list of results. Let’s take a look at how we can wire everything together to bring semantic search to life — using just a bit of SQL, a dash of OpenAI, and some APEX wizardry.

What we’re really searching through here are the descriptions. That’s the field we’ve sent to OpenAI to generate embeddings for, and it’s those vectors that live in our database. So when a user types something into the search box, we’re not looking for matching words — we’re comparing the meaning of their input to the meaning of each description. That’s where the semantic search actually happens.

So, how do we actually make this work? Let’s take a look at the SQL I used to build the Classic Report. This is where the core of the semantic search logic lives — the part that takes the user’s input, turns it into a vector, and compares it to the ones we’ve already stored.

It starts out simple — we’re selecting the usual fields: ID, title, description, and MIME type. But the key part is the DISTANCE column. That’s where we calculate how close each stored embedding is to what the user typed in.

If the search field is empty, we just return 0 to keep things from breaking. Otherwise, we use apex_ai.get_vector_embeddings to generate a vector from the user’s input, and then we compare it with the stored vector using VECTOR_DISTANCE.
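The report query itself is Oracle SQL, but the logic of the DISTANCE column is easy to try outside the database. The Python sketch below mirrors it under a few loud assumptions: `fake_embed` is a hypothetical stand-in for the embedding call (it returns hand-written toy vectors, not real OpenAI embeddings), the three-dimensional vectors are made up, and cosine distance is assumed as the metric, since the text does not say which metric the query passes to VECTOR_DISTANCE.

```python
import math


def cosine_distance(a, b):
    """Cosine distance = 1 - cosine similarity (0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def fake_embed(text):
    """Hypothetical stand-in for the embedding service; NOT a real model."""
    toy_vectors = {
        "lighthouse": [0.9, 0.1, 0.3],  # made-up values for illustration
    }
    return toy_vectors[text]


def distance_column(search_text, stored_vector):
    """Mimic the DISTANCE column: 0 for an empty search, else a distance."""
    if not search_text:
        # Empty search field: skip the embedding call and return 0.
        return 0.0
    return cosine_distance(stored_vector, fake_embed(search_text))


stored = [0.8, 0.2, 0.4]  # made-up embedding of one artwork description
print(distance_column("", stored))            # no search yet
print(distance_column("lighthouse", stored))  # small value: close in meaning
```

The early return for an empty input is the same guard described above: without a search phrase there is nothing to embed, so the column falls back to 0 rather than failing.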

The results are ordered by that distance — smaller values mean closer matches. So the most relevant entries float to the top, even if the wording is completely different.
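To make the ordering rule concrete, here is a small Python sketch of the same idea: sort rows by ascending distance so the closest match comes first. The titles and distance values are invented for illustration and are not taken from the app’s data.

```python
def rank_by_distance(rows):
    """Return rows sorted so the smallest distance (closest match) is first."""
    return sorted(rows, key=lambda row: row["distance"])


# Made-up rows; in the report these would come from the query result.
rows = [
    {"title": "City at Night", "distance": 0.62},
    {"title": "Beacon over the Bay", "distance": 0.18},
    {"title": "Quiet Harbor", "distance": 0.35},
]

for row in rank_by_distance(rows):
    print(f'{row["distance"]:.2f}  {row["title"]}')
```

This plays the role of ordering the query by the distance column: no threshold is applied, so every row is returned, and only its position reflects how relevant it is.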

Now let’s take a look at what happens in the results when we perform a search.

After searching for “lighthouse”, the app displays a ranked list of artworks based on semantic distance. Entries closer in meaning appear at the top, demonstrating how vector-based search understands concepts rather than exact words.

Once the user types in a search term — in this case, “lighthouse” — the real strength of semantic search begins to show. Behind the scenes, the system generates a vector based on the input and compares it with the vectors already stored in the database. What you see in the screenshot is the outcome of that comparison: a ranked list of artworks, not based on exact keyword matches, but on conceptual proximity.

The Distance column, highlighted in red, reflects how closely the meaning of each description aligns with the user’s query. A lower number means a closer match. So even if the word “lighthouse” doesn’t appear verbatim, entries that carry similar ideas — solitude, guidance, the sea — naturally rise to the top.

That’s the essence of semantic search: it doesn’t just look at what users type, it tries to understand what they mean. With Oracle’s VECTOR_DISTANCE function and the power of pre-generated OpenAI embeddings, we’re stepping into a smarter, more intuitive way to connect people with content.

With this implementation, we’ve crossed an important threshold — moving beyond literal searches into a space where context, intent, and true meaning take center stage. This is just the beginning of what semantic search can bring to the Oracle APEX ecosystem. To learn more about this and other innovative solutions, feel free to visit our company website, where we share resources, practical examples, and next steps to help take your applications to the next level.
